Inspired by the Summon result click stats that Matthew Reidsma has extracted (and, to be honest, I find myself being regularly inspired by what Matthew’s doing!), I’ve started tracking the clicks on our Summon instance too.

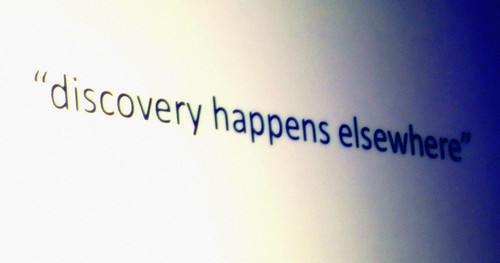

Anyone who’s had the misfortune to hear me present recently will know I’ve been waffling on about the importance of making e-resources easy to use and painless to access, and the fact that most of us are biologically programmed to follow the easiest route to information…

…an information [seeker] will tend to use the most convenient search method, in the least exacting mode available. Information seeking behaviour stops as soon as minimally acceptable results are found.

Wikipedia, Principle of least effort

Why will our students not get up and walk a hundred meters to access a key journal article in the library? … the overwhelming propensity of most people is to invest as absolutely little effort into information seeking as they possibly can.

Prof Marcia J. Bates, “Toward an Integrated Model of Information Seeking & Searching” (2002)

…numerous studies have shown users are often willing to sacrifice information quality for accessibility. This fast food approach to information consumption drives librarians crazy. “Our information is healthier and tastes better too” they shout. But nobody listens. We’re too busy Googling.

Peter Morville, “Ambient Findability” (O’Reilly 2005)

As early as 2004, in a focus group for one of my research studies, a college freshman bemoaned, “Why is Google so easy and the library so hard?”

Carol Tenopir, “Visualize the Perfect Search” (Library Journal 2009)

The present findings indicated that the principle of least effort prevailed in the respondents’ selection and use of information sources.

Liu & Yang, “Factors Influencing Distance-Education Graduate Students’ Use of Information Sources: A User Study” (2004)

People do not just use information that is easy to find; they even use information that they know to be of poor quality and less reliable — so long as it requires little effort to find — rather than using information they know to be of high quality and reliable, though harder to find.

Jason Vaughan, “Web Scale Discovery Services” (ALA TechSource 2011)

If you’re looking at Discovery Services, demand a trial and don’t get distracted by how many options the advanced search page has, how well it handles complex Boolean queries, or how many obscure specialist subject headings it supports — to misquote Obi-Wan Kenobi, “these are not the features you are looking for”. The real questions you should be asking are:

- Can students use the skills they’ve already picked up from a lifetime of searching Google to use this thing?

- If I pluck 2 or 3 vaguely relevant keywords out of the air and type them in (possibly misspelling them), do I get useful and relevant results?

- If I choose some slightly more carefully considered keywords, are the first 5 results on the first page all relevant?

- Does the interface look uncluttered, straightforward to use and, if I wanted to, is it obvious how to refine the search?

- Does this product work with EZProxy (or similar) to provide easy off-campus access to articles?

…in fact, and please don’t take this the wrong way, you’re possibly not the best person to be answering some of those questions as your neural pathways have been severely damaged by years of using poorly designed journal database interfaces and you have an unhealthy (bordering on the sexually perverse) obsession with “advanced” search pages 😉

Instead, grab some of your newest students (ideally ones who look blankly at you when you ask them if they know what a Boolean operator is) and let them play with it. The more Information Illiterate they are, the better! Treat their comments as pearls of wisdom (“out of the mouth of babes…”) and try to see the library’s e-resource world through their eyes for what it really is: a scary alien landscape of weird library terminology, perplexing login screens, and unnecessary friction at every turn. Above all, never forget that “Libraries are a cruel mistress”!

Matt Borg nicely summed up the above when he cheekily said (and apologies for paraphrasing you, Matt!)…

The trouble with Summon is that students don’t need to be taught how to use it, but librarians do

In other words, you shouldn’t have to be an Information Professional to use a Discovery Service and you shouldn’t have to become a mini-librarian just in order to figure out how the damn thing works. If the interface looks comfortable and familiar to you, it’s probably been designed for librarians to use and will scare the bejebus out of most of your students. Swallow hard, gird your loins and remember that you’re not buying this product to make your life easier (although chances are it will), you’re buying it to make life easier for your users.

Or, to put it another way, if a Discovery Service looks like a journal database and acts like a journal database, then it probably is a journal database and not a Discovery Service. There’s a very good reason Summon looks more like Google and less like <insert name of your favourite database here> 😀

(If your idea of a “good time” is to scare undergraduates in training sessions by showing them journal database interfaces — “it’s OK, I’m a friendly librarian and I’m here to show you just how hard it can be to find an article!” — then it’s probably high time you sought medical counselling ;-))

OK, so why am I ranting on about all this stuff? It’s simply because I’ve been pulling out some usage stats from our Summon instance…

- The library’s print collection accounts for just 0.3% of the items, but accounts for 10.3% of the result clicks — I think our users are trying to tell us that they think our OPAC sucks and they’d rather use Summon to search for books

- 89% of the results clicked on appeared on the first page of results (as with Google, users rarely delve any further than page 1 of the results)

- Only 2% of result clicks came from beyond the 4th page of the results — very few users will explore the long tail of results

- 50.5% of result clicks were for the first 4 results on page 1 — the majority of users won’t even bother to scroll down the page!

- 72.3% of searches used 3 keywords or less — students are using their Google skills

- Since launching Summon, we’ve seen increases of 300% to 1000% in the COUNTER full-text download stats for many of the journal platforms we subscribe to — although “cost per use” can be a crude measure, we’re getting much better value out of our e-resource subscriptions now
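
If you fancy pulling similar numbers out of your own click tracking, here is a rough sketch of the arithmetic in Python. Everything in it is an assumption made for illustration: the `(page, position)` click records, the list of search strings, the `click_stats` and `percent` names, and the sample rows are all made up, and this is not necessarily how the figures above were produced.

```python
# Hypothetical click log: (results_page, position_on_page) for each result click.
# These rows are made-up illustration data, NOT real Summon stats.
clicks = [
    (1, 1),
    (1, 3),
    (1, 7),
    (2, 2),
    (5, 1),
]

# Hypothetical list of search queries, again invented for the example.
searches = [
    "climate change",
    "nursing ethics",
    "social media marketing strategy",
    "effects of sleep deprivation on memory",
]


def percent(part, whole):
    """Return part/whole as a percentage rounded to 1 decimal place."""
    return round(100 * part / whole, 1) if whole else 0.0


def click_stats(clicks, searches):
    """Summarise where clicks landed and how long the search queries were."""
    total_clicks = len(clicks)
    first_page = sum(1 for page, _ in clicks if page == 1)
    top_four = sum(1 for page, pos in clicks if page == 1 and pos <= 4)
    beyond_page_4 = sum(1 for page, _ in clicks if page > 4)
    # Crude keyword count: split the query on whitespace.
    short_queries = sum(1 for query in searches if len(query.split()) <= 3)
    return {
        "first_page_pct": percent(first_page, total_clicks),
        "top_four_pct": percent(top_four, total_clicks),
        "beyond_page_4_pct": percent(beyond_page_4, total_clicks),
        "three_keywords_or_fewer_pct": percent(short_queries, len(searches)),
    }


stats = click_stats(clicks, searches)
for name, value in stats.items():
    print(f"{name}: {value}%")
```

Counting keywords by splitting on whitespace is deliberately crude; quoted phrases or Boolean operators would need smarter handling, but for "whack in a few keywords" searches it is probably close enough.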

All of the above tells me that Summon is doing all the things we originally bought it for and that the relevancy ranking is schmokin’!

“Yes”, there’s still a place for Information Literacy in all of this, and, “yes”, we need to be able to support researchers and Boolean Buffs, but the majority of students just want to whack in a few keywords and quickly find something that’s relevant — if you select a product that allows them to do just that, they will come 🙂