Almost 2 years ago, 729 of you generously took time to fill out a survey about OPACs (archived here). You can find a selection of blog posts about the results here.

I’m pleased to say that a follow-up survey is now being conducted by Bowker and I’d encourage as many of you as possible to fill it in. For every 100 responses, Bowker will donate a gift of schoolbooks to deprived children via the Oxfam Unwrapped scheme 🙂

I think in 2007 we managed to gather enough statistical evidence to say “OPACs suck” and it’ll be interesting to see how much has changed in the last couple of years! Quite a few of you were hoping to implement new features in your OPACs …did it happen? …did those features meet your expectations?

Category: OPAC Survey

OPAC Survey – Q2c – OPAC Envy

Question 2 – Cutting Edge or Yesterday’s News

Q2c) Have you ever suffered from “OPAC envy” when looking at someone else’s OPAC?

“yes”: 565 (77.5%)

“no”: 151 (20.7%)

no response: 13 (1.8%)
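As a quick sanity check, the percentages above can be reproduced from the raw counts. This is only a sketch: the counts come from the figures quoted above, while the variable and function names are my own.

```python
# Counts for Q2c as reported above; the names are illustrative only.
counts = {"yes": 565, "no": 151, "no response": 13}


def percentages(counts):
    """Return each count as a percentage of the total, rounded to 1 decimal place."""
    total = sum(counts.values())
    return {answer: round(100 * n / total, 1) for answer, n in counts.items()}


shares = percentages(counts)
for answer, share in shares.items():
    print(f"{answer}: {share}%")
```

The three counts add up to the 729 people who took the survey.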

response vs percentage of respondents:


OPAC Survey – Q2b – OPAC Age

Question 2 – Cutting Edge or Yesterday’s News

Q2b) If “2007” represents a cutting edge OPAC with all the features both you and your users would expect, how far in the past do you feel your current OPAC is?

mode response: 2005

number of respondents: 724

Although the most common response was 2005, the majority (56%) of respondents picked 2002 or earlier.

OPAC Survey – Q2a – Meeting User Needs and Expectations

Question 2 – Cutting Edge or Yesterday’s News

Q2a) On a scale of 1 to 10, how well do you think your OPAC meets the needs and expectations of your users?

average response out of 10: 4.56

mode response out of 10: 3

number of respondents: 727

More worrying than the average response, the most common response was just 3 out of 10!
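To illustrate how the most common response can sit below the average, here is a small sketch using Python's `statistics` module. The tally below is entirely hypothetical (it is not the survey data); it only shows the shape of distribution where a cluster of low scores sets the mode while a tail of higher scores pulls the average up.

```python
from statistics import mean, mode

# Hypothetical tally (rating -> number of respondents); NOT the survey data.
tally = {1: 2, 2: 3, 3: 6, 4: 3, 5: 2, 6: 3, 7: 3, 8: 2, 9: 1, 10: 0}

# Expand the tally into one rating per respondent.
ratings = [rating for rating, n in tally.items() for _ in range(n)]

average = mean(ratings)
most_common = mode(ratings)
print(f"average: {average:.2f}, mode: {most_common}")
```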

response out of 10 vs number of respondents:


OPAC Survey – Q1 – Overall Happiness

Question 1 – OPAC Happiness

Q1a) On a scale of 1 to 10 (where 1 is extremely unhappy and 10 is extremely happy), how happy are you with your OPAC?

average response out of 10: 5.18

mode response out of 10: 7

number of respondents: 729

response out of 10 vs number of respondents:


OPAC Survey – Final Results

If you’ve come to this page via the CILIP Update article, please note that the published article should have been credited to “Dave Pattern, Lynn Stevens, and Lisa Balman”. The article was very much a collaborative effort by all three of us, and I’m deeply indebted to Lynn and Lisa for their help.

I suspect the error in attribution was a genuine oversight by the hardworking staff at Update, and hopefully they’ll be able to include an erratum in the next edition.

OPAC Survey – Final Results

After releasing a large chunk of the results in May and June, I did promise to put together a full report of the findings. Unfortunately, as so often happens, life (and work) got in the way.

So, I’ve decided to try and make things easier for myself by releasing the results for each question separately as individual blog posts (partly inspired by how Meredith Farkas handled the recent “Survey of the Biblioblogosphere”).

This blog post will act as a growing index of links, so you might want to bookmark it!

Links (this section will grow)

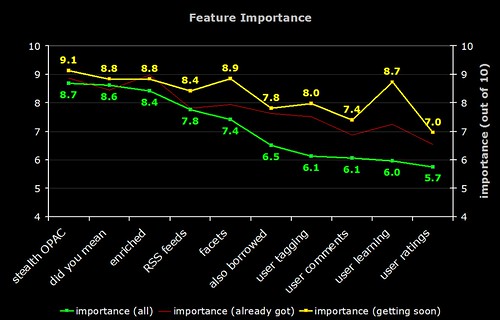

OPAC Survey results – part 8

Library and Information Show 2007

I’ve just about recovered from LiS 2007 and hopefully I didn’t cram too much into the 30 minutes I had for my session!

There’s a few photos from the event on Flickr…

OPAC Survey

I think the previous post will be the last batch of graphs unless I uncover something new in the data. Instead, many thanks to my colleague Lisa (Senior Assistant Librarian for Applied Sciences and Computing & Engineering) for sifting through the respondent comments for the common themes. The full figures will be in the report, but here’s a snifter of the “feature comments”…

The most common wanted feature was federated searching (both electronic resources and other libraries’ holdings), with over 30 responses.

Next up, being able to customise the OPAC (look & feel and adding new features), with over 15 comments.

Relevancy ranking was specifically mentioned by at least 10 respondents, with 9 wanting personal borrowing histories, Amazon style wish lists, and saved session results.

1 respondent wanted to be able to search by colour of cover and another wanted wiki style functionality adding to the OPAC.

For the “final comments” section, here’s a sample…

10 respondents felt that “2.0” style features could be a distraction and that the OPAC should be kept simple. Another 10 said that lack of staff expertise or money was stopping them from being able to make the changes they would like to their OPACs.

However, the most common theme was laying the blame squarely at the feet of the vendors — broken promises, slowness of feature delivery, etc.

Lisa also picked out a handful of the comments that she felt stood out and here’s just 3 of them…

It seems that OPACs are too busy trying to please every customer. They spend too much time developing and not nearly enough time releasing software. The ability of the library to customize the OPAC should be the primary feature. Developing extensive APIs and building in flexibility should be at the top of the list for development. Let librarians develop the specific tools they want or need. Allow librarians to create add-ons and templates and distribute them to other libraries. OPAC vendors need to concentrate on search technology and not the next great feature.

I think we should always keep in mind the key aim of the OPAC is so users can locate/access resources. These new web 2.0 type enhancements can be fantastic, but there is a danger in using them just because we think we should.

We took a risk in 2002 and extensively customized our OPAC outside the confines of vendor support. Our efforts have been extremely successful and it has essentially become our new model for the direction we’re heading in the future. We’ll be doing MORE customization and application development in the future, rather than less. We’re much more likely now to move away from traditional library automation vendors as we consider what comes next.

(Phil Feilmeyer, Hennepin County Library)

OPAC Survey results – part 7

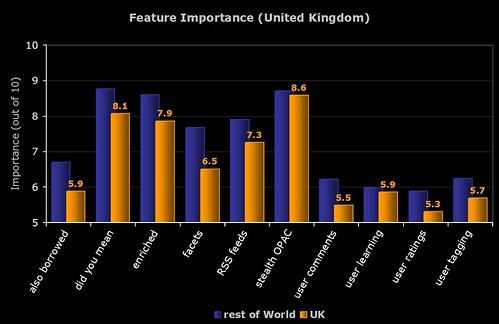

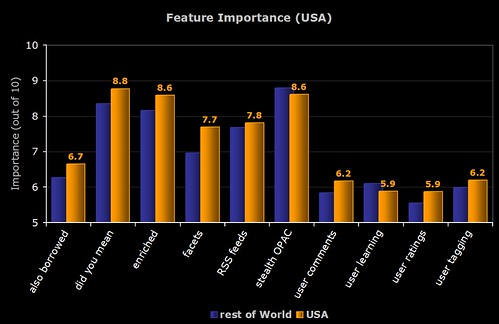

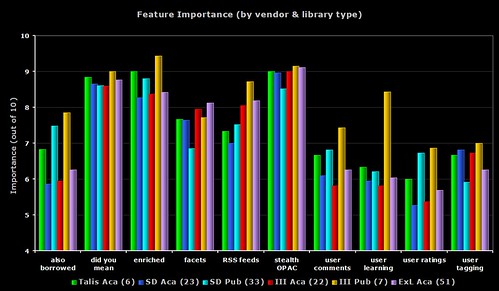

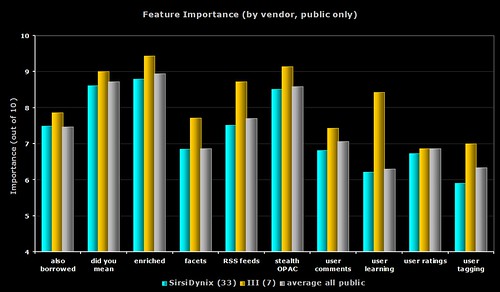

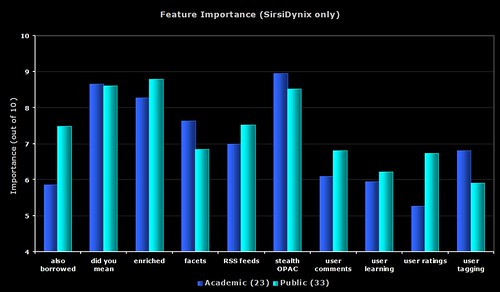

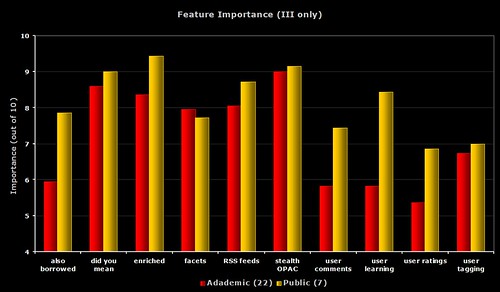

Yesterday, Owen Stephens asked for more details about feature importance breakdowns for each vendor by type of library.

As a caveat, please take into account the number of respondents for each group:

Talis – 6 academic

SirsiDynix – 23 academic & 33 public

III – 22 academic & 7 public

Ex Libris – 51 academic

That leaves 46 other academic and 35 other public respondents who either use a system from another supplier, or didn’t state which vendor or system they used. Their responses are included as part of the grey average bars in some of the graphs.
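The group sizes above can be tallied by library type with a few lines of Python. This is a minimal sketch using the counts listed above; the data structure and function name are my own. The totals should agree with the 148 academic and 75 public respondents quoted for the grey average bars.

```python
# Respondent counts per group, as listed above (vendor -> library type -> count).
named = {
    "Talis": {"academic": 6},
    "SirsiDynix": {"academic": 23, "public": 33},
    "III": {"academic": 22, "public": 7},
    "Ex Libris": {"academic": 51},
}
# Respondents using another supplier, or not naming a vendor or system.
other = {"academic": 46, "public": 35}


def totals_by_type(named, other):
    """Add the named vendor groups to the 'other' respondents, per library type."""
    totals = dict(other)
    for groups in named.values():
        for library_type, n in groups.items():
            totals[library_type] = totals.get(library_type, 0) + n
    return totals


totals = totals_by_type(named, other)
print(totals)
```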

I might also attempt a second set of graphs to split out the Unicorn & Dynix/Horizon respondents, and the Aleph & Voyager respondents for each library type.

Finally, as with some of the other graphs, the Y axis doesn’t always start at zero. Personally I prefer to be able to concentrate on the differences in the bar heights and I tend to adjust the Y axis start value accordingly. If you prefer to see the true size of the bars (i.e. with the Y axis starting at zero), please let me know and I’ll upload new graphs.
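To give a feel for how much the axis start value changes what you see, here is a rough sketch that compares the drawn heights of two bars. The function name and the two ratings are hypothetical and not taken from the survey data.

```python
def apparent_ratio(a, b, axis_start=0.0):
    """Ratio of the drawn bar heights for values a and b when the Y axis starts at axis_start."""
    if axis_start >= min(a, b):
        raise ValueError("axis_start must be below both values")
    return (a - axis_start) / (b - axis_start)


# Hypothetical average ratings, not taken from the survey data.
high, low = 7.0, 6.0
print(apparent_ratio(high, low))       # Y axis starting at zero
print(apparent_ratio(high, low, 5.0))  # Y axis starting at 5
```

With the axis starting at zero the taller bar is only about a sixth taller than the other. With the axis starting at 5 it is drawn twice as tall, which makes the difference easier to spot but also easier to overestimate.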

1) Feature Importance – all 6 groups

A busy graph, but one that does draw out some of the differences between all of the groups.

In 9 out of the 10 features, it’s the seven III public respondents who are giving the highest perceived importance. Only faceted browsing sees the Ex Libris academic respondents getting the highest importance rating.

With the “user learning” (i.e. OPAC that makes personalised recommendations for each user), none of the III public respondents either have the feature or are planning to implement it (even though they regard it as a relatively high importance). Four of the Ex Libris academic respondents are planning to implement the feature soon (does Primo offer this?).

2) Feature Importance – academic only

The same data as the first graph, but this time only showing the academic respondents. I count the six Talis respondents as giving the highest importance rating to 6 of the features, then Ex Libris get 3, and then 1 (user tagging) from SirsiDynix.

The Talis respondents are all from the UK, so it’s worth remembering that (on average) the 171 UK respondents always gave lower ratings to the features than non-UK respondents. I’m going to go out on a limb here and say that I believe the high ratings from the Talis respondents are down to all of the “2.0” work and promotion Talis have been doing recently.

The grey bar shows the average from all of the 148 academic respondents.

3) Feature Importance – public only

The same data as the first graph, but this time only showing the public respondents. For all 10 features, it’s the seven III respondents who give the highest ratings.

The grey bar shows the average from all of the 75 public respondents.

4) Feature Importance – SirsiDynix only

The same data as the first graph, but this time only showing the SirsiDynix respondents.

5) Feature Importance – III only

The same data as the first graph, but this time only showing the III respondents.

6) Feature Importance – each group compared to average

I’m sure you’re all getting graph fatigue (grafatigue?) now, so I’ll just give you links to the images on Flickr. Each graph shows the response from the group compared with the average from all respondents for that library type.

Talis – academic

SirsiDynix – academic

III – academic

Ex Libris – academic

SirsiDynix – public

III – public

OPAC Survey results – part 6

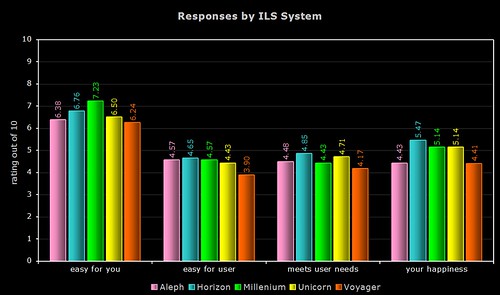

Part way through the survey, I added an optional question to allow respondents to specify which ILS product they use.

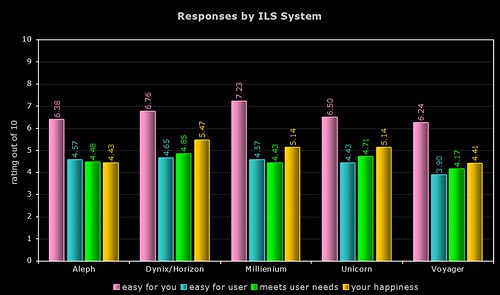

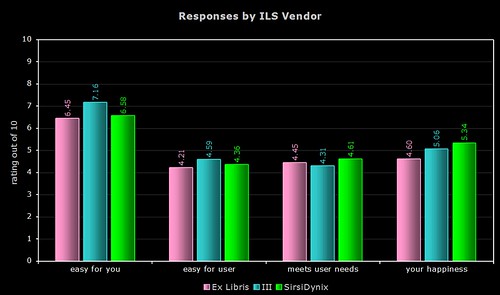

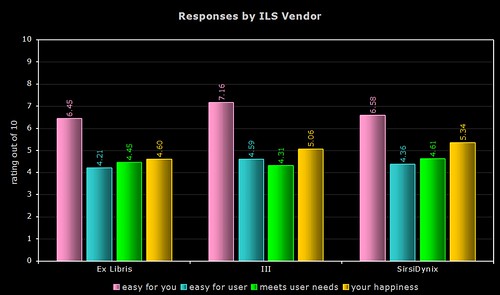

Here’s the breakdown of average responses to the following questions (which all asked the respondents to give a rating out of 10)…

1) easy for you — how easy do you (i.e. the respondent) find your OPAC is to use

2) easy for user — how easy do you think an average user/patron finds your OPAC is to use

3) meets user needs — how well do you think your OPAC meets the needs and expectations of your users

4) your happiness — how happy overall are you with your OPAC

By Product

Quite a few respondents simply listed their vendor (e.g. “SirsiDynix” or “Ex Libris”), so the above two graphs only use data from the responses where the actual system was named. As the Dynix ILS and Horizon use the same OPAC (HIP), I’ve grouped their responses together.

By Vendor

The above two graphs use more raw data (a total of 154 respondents, including the responses where only the vendor was named). As most of you will know, Ex Libris and SirsiDynix support more than one OPAC product.

Comments

I’m not sure that any one system or vendor comes out smelling of roses (or stinking of horse manure!), and I certainly wouldn’t suggest you base your next ILS choice on the results, but here’s a few comments…

1) ease of use for the respondent

Innovative and their Millennium product are the clear winners here, with a gap of nearly 0.99 between them and last place Voyager. Overall, the Ex Libris products get the lowest ratings.

2) ease of use for users/patrons

The Dynix/Horizon OPAC wins by a nose, with a noticeable gap between last place Voyager and the others.

3) meets the needs and expectations of users/patrons

Again, the Dynix/Horizon OPAC wins, with the other SirsiDynix OPAC (Unicorn) coming in second. Once again, Voyager comes last of the five.

4) overall happiness

For the third time, the Dynix/Horizon OPAC wins, with the two Ex Libris products coming last.