I’m very proud to announce that Library Services at the University of Huddersfield has just done something that would perhaps have been unthinkable a few years ago: we’ve released a major portion of our book circulation and recommendation data under an Open Data Commons/CC0 licence. In total, there’s data for over 80,000 titles derived from a pool of just under 3 million circulation transactions spanning a 13-year period.

https://library.hud.ac.uk/usagedata/

I would like to lay down a challenge to every other library in the world to consider doing the same.

This isn’t about breaching borrower/patron privacy — the data we’ve released is thoroughly aggregated and anonymised. This is about sharing potentially useful data to a much wider community and attaching as few strings as possible.

I’m guessing some of you are thinking: “what use is the data to me?” Well, possibly of very little use: it’s just a droplet in the ocean of library transactions and it’s only data from one medium-sized new University, somewhere in the north of England. However, if just a small number of other libraries were to release their data as well, we’d be able to begin seeing the wider trends in borrowing.

The data we’ve released essentially comes in two big chunks:

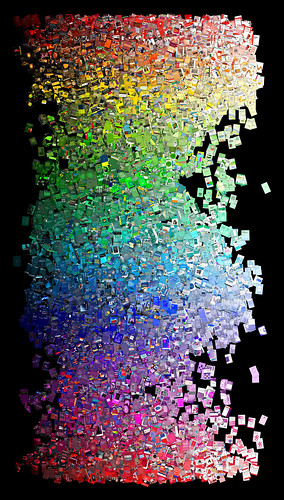

1) Circulation Data

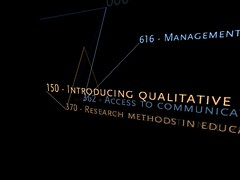

This breaks down the loans by year, by academic school, and by individual academic courses. This data will primarily be of interest to other academic libraries. UK academic libraries may be able to directly compare borrowing by matching up their courses against ours (using the UCAS course codes).

2) Recommendation Data

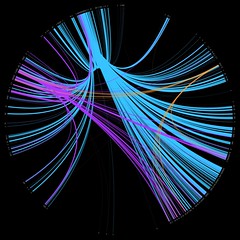

This is the data which drives the “people who borrowed this, also borrowed…” suggestions in our OPAC. This data had previously been exposed as a web service with a non-commercial licence, but is now freely available for you to download. We’ve also included data about the number of times the suggested title was borrowed before, at the same time, or afterwards.
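For anyone wondering how “people who borrowed this, also borrowed…” suggestions can be derived from raw loans, here is a minimal Python sketch. It is an illustration of the general co-borrowing idea, not the code behind our OPAC: the `(borrower_id, isbn)` input shape, the function names and the `min_borrowers` cut-off are all assumptions made up for this example.

```python
from collections import Counter, defaultdict
from itertools import combinations


def co_borrow_counts(loans):
    """Count how many borrowers took out each pair of titles.

    `loans` is an iterable of (borrower_id, isbn) pairs. This input
    shape is hypothetical and is not the format of the released files.
    """
    titles_by_borrower = defaultdict(set)
    for borrower_id, isbn in loans:
        titles_by_borrower[borrower_id].add(isbn)

    counts = defaultdict(Counter)
    for titles in titles_by_borrower.values():
        # Every pair of titles borrowed by the same person counts once.
        for a, b in combinations(sorted(titles), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def suggestions(counts, isbn, min_borrowers=2, limit=5):
    """Return up to `limit` (isbn, borrowers) pairs for one title.

    Pairs shared by fewer than `min_borrowers` people are dropped, so a
    suggestion never rests on one person's borrowing (assumed cut-off).
    """
    ranked = counts.get(isbn, Counter()).most_common()
    return [(other, n) for other, n in ranked if n >= min_borrowers][:limit]


if __name__ == "__main__":
    sample = [
        ("u1", "A"), ("u1", "B"),
        ("u2", "A"), ("u2", "B"), ("u2", "C"),
        ("u3", "A"), ("u3", "C"),
    ]
    print(suggestions(co_borrow_counts(sample), "B"))
```

The anonymised borrower identifier is only needed while counting; the output contains nothing but title pairs and totals.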

Smaller data files provide further details about our courses, the relevant UCAS course codes, and expanded ISBN lookup indexes (many thanks to Tim Spalding for allowing the use of thingISBN data to enable this!).

All of the data is in XML format and, in the coming weeks, I’m intending to create a number of web services and APIs which can be used to fetch subsets of the data.

The clock has been ticking to get all of this done in time for the “Sitting on a gold mine: improving provision and services for learners by aggregating and using learner behaviour data” event, organised by the JISC TILE Project. Therefore, the XML format is fairly simplistic. If you have any comments about the structuring of the data, please let me know.

I mentioned that the data is a subset of our entire circulation data: the criteria for inclusion were that the relevant MARC record must contain an ISBN and that borrowing must have been significant. So, you won’t find any titles without ISBNs in the data, nor any books which have only been borrowed a couple of times.
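If you want to apply similar inclusion criteria to your own data, the filter can be sketched in a few lines of Python. The record layout (a dict with `isbn` and `loans` keys) and the `min_loans` value are hypothetical: I haven’t stated here what counts as “significant” borrowing, so treat the number as a placeholder.

```python
def eligible_titles(records, min_loans=10):
    """Keep only titles that have an ISBN and enough loans.

    `records` is a list of dicts with hypothetical "isbn" and "loans"
    keys; `min_loans` is an assumed threshold, not the one we used.
    """
    return [
        record
        for record in records
        if record.get("isbn") and record.get("loans", 0) >= min_loans
    ]
```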

So, this data is just a droplet — a single pixel in a much larger picture.

Now it’s up to you to think about whether or not you can augment this with data from your own library. If you can’t, I want to know what the barriers to sharing are. Then I want to know how we can break down those barriers.

I want you to imagine a world where a first year undergraduate psychology student can run a search on your OPAC and have the results ranked by the most popular titles as borrowed by their peers on similar courses around the globe.

I want you to imagine a book recommendation service that makes Amazon’s look amateurish.

I want you to imagine a collection development tool that can tap into the latest borrowing trends at a regional, national and international level.

Sounds good? Let’s start talking about how we can achieve it.

FAQ (OK, I’m trying to anticipate some of your questions!)

Q. Why are you doing this?

A. We’ve been actively mining circulation data for the benefit of our students since 2005. The “people who borrowed this, also borrowed…” feature in our OPAC has been one of the most successful and popular additions (second only to adding a spellchecker). The JISC TILE Project has been debating the benefits of larger scale aggregations of usage data and we believe that would greatly increase the end benefit to our users. We hope that the release of the data will stimulate a wider debate about the advantages and disadvantages of aggregating usage data.

Q. Why Open Data Commons / CC0?

A. We believe this is currently the most suitable licence to release the data under. Restrictions limit (re)use and we’re keen to see this data used in imaginative ways. In an ideal world, there would be services to harvest the data, crunch it, and then expose it back to the community, but we’re not there yet.

Q. What about borrower privacy?

A. There’s a balance to be struck between safeguarding privacy and allowing usage data to improve our services. It is possible to have both. Data mining is typically about looking for trends — it’s about identifying sizeable groups of users who exhibit similar behaviour, rather than looking for unique combinations of borrowing that might relate to just one individual. Setting a suitable threshold on the minimum group size ensures anonymity.
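As a rough illustration of the minimum group size idea, the Python sketch below only publishes a count when enough distinct borrowers sit behind it. The data layout, the function name and the default of 5 are assumptions for the example, not a description of the thresholds applied to the released data.

```python
def publishable_counts(borrowers_by_group, min_group_size=5):
    """Return borrower counts only for groups that are large enough.

    `borrowers_by_group` maps a group label (for example a course and
    title combination) to the set of distinct borrower ids behind it.
    Groups below `min_group_size` are left out entirely rather than
    published with a small number. Both the layout and the default
    threshold are hypothetical.
    """
    return {
        group: len(borrowers)
        for group, borrowers in borrowers_by_group.items()
        if len(borrowers) >= min_group_size
    }
```

Only the counts leave the function; the borrower ids are used for counting and never appear in the output.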